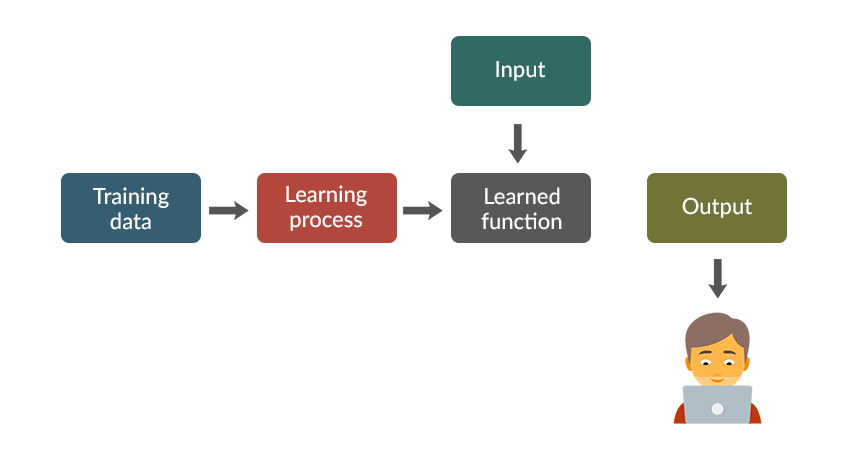

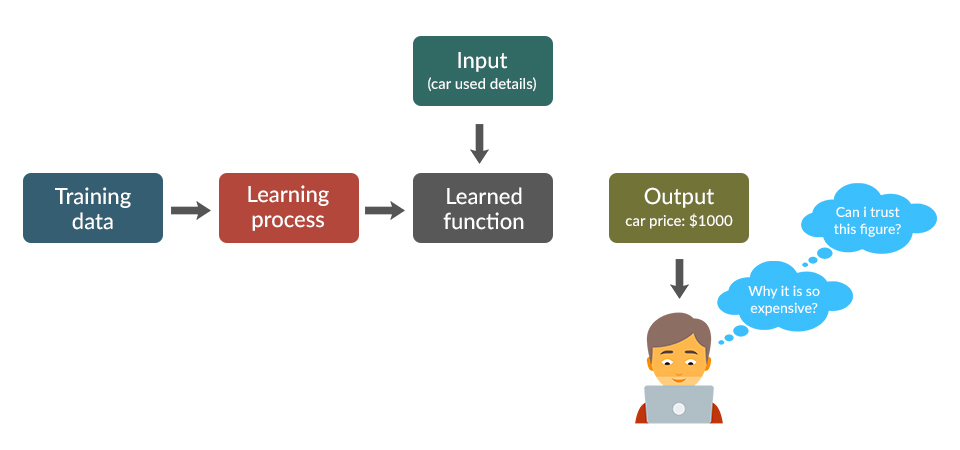

Explainable Artificial Intelligence (XAI): An Introduction

Technology - January 29, 2021
